Introduction

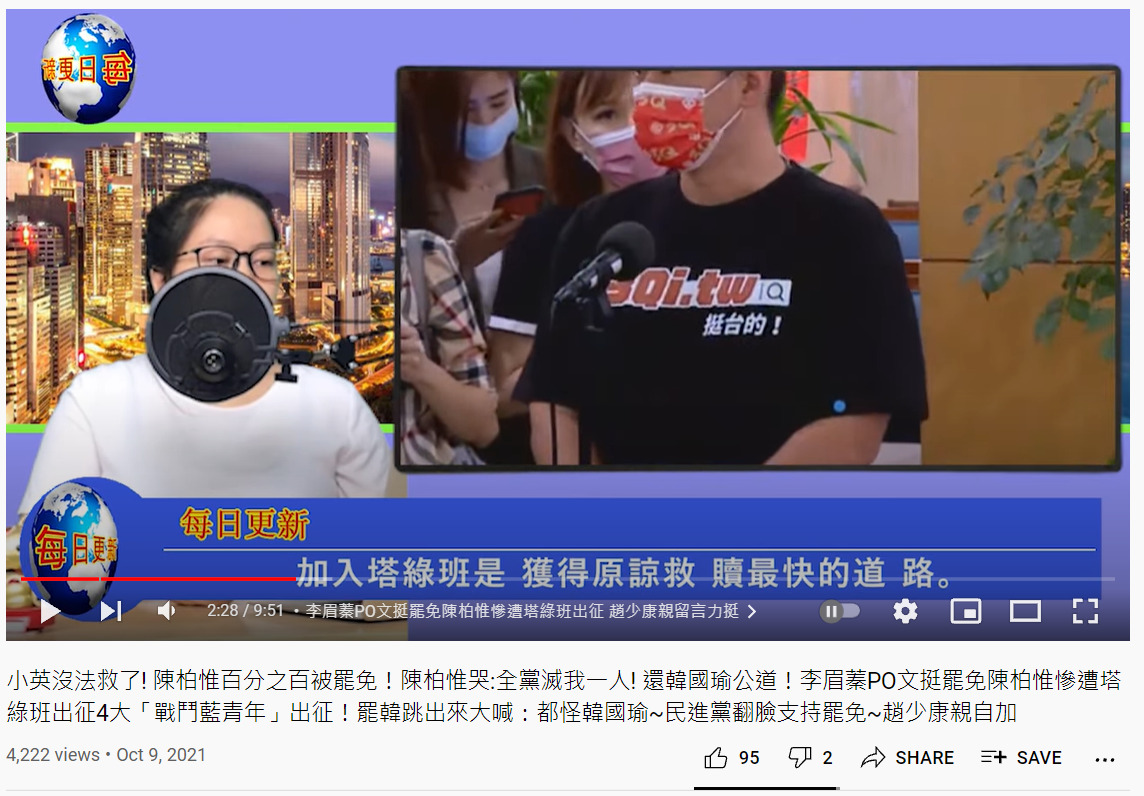

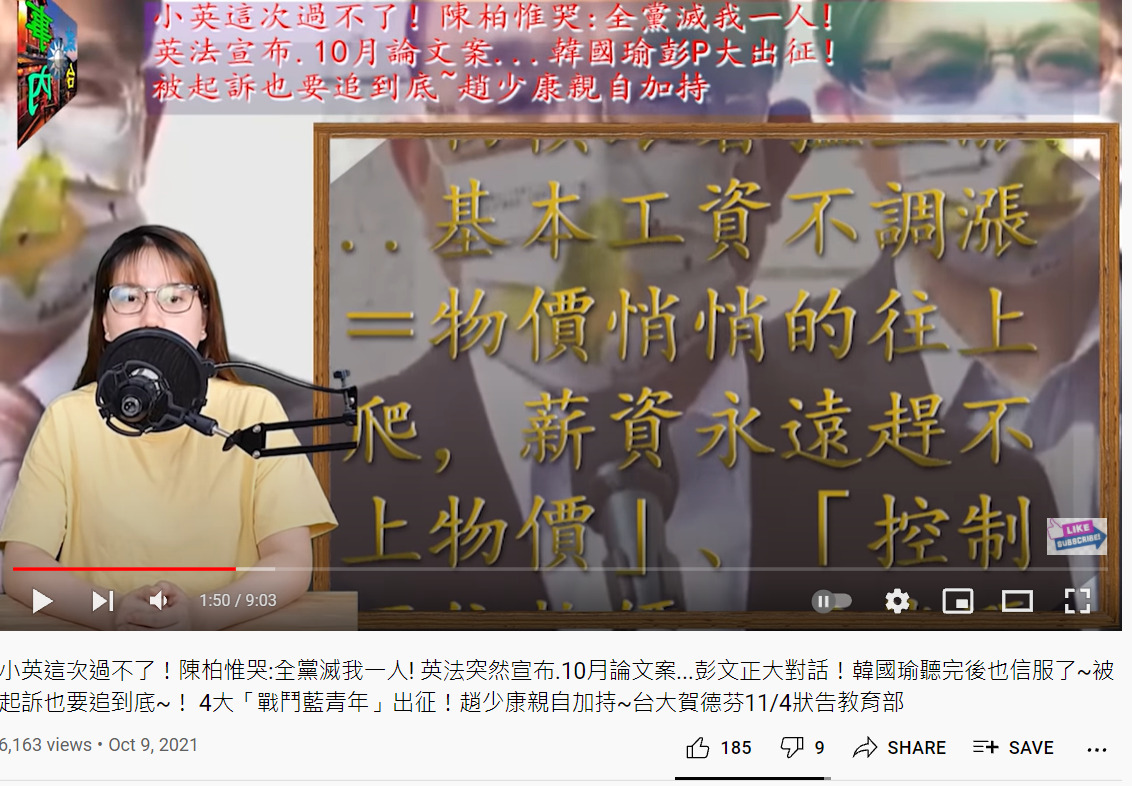

This article illustrates a new type of information operation: “puppet-anchor” channels on YouTube. What is a puppet anchor? Figure 1 shows screenshots of four different puppet-anchor channels streaming before October 10, 2021. In these four videos, an anchor sits on the stage and introduces a news story. The transcript is presented at the bottom (or in the middle) of the screen, and a logo can be seen swirling in the top left corner. These anchors do not behave like real humans: their body movements repeat every few minutes and across different videos (see the bottom two screenshots from two channels in Figure 1). Their voices are machine-generated (similar to the voices produced by Google Translate), and the content they read is all from China-affiliated news articles. Their mouths are covered by a large microphone, so the audience cannot determine whether their lips and voices match. These human-like anchors are copy-pasted from other videos and only serve as instruments to relay China-affiliated news content. Thus, they are puppet anchors.

Research Method and Data Collection

How many puppet anchors are there on YouTube? During the data collection period, from June 2020 to October 2021, we manually identified eight YouTube channels sharing four puppet anchors through a process similar to snowball sampling. The first channel was found in June 2020, and the remaining channels were found by the following methods: (1) Some channels were mentioned in other channels’ “Channels” or “About” sections. (2) Some channels have similar descriptions and can be found by searching for specific keywords (e.g., translated, “…this channel’s view count is recently covered by dark clouds, please watch this to help it find the sunshine again!”). (3) Some channels were found by searching YouTube for terms related to Chinese propaganda (e.g., “Biden just surrendered!”, “It just happened! China wins again!”, “Tsai Ing-wen cried for help!”). We then checked each video for the identifying features listed above to determine whether it likely contained a puppet anchor.

Through October 10, 2021, we identified eight channels containing puppet anchors. Subsequently, we used the “tuber” library to crawl the metadata of these eight channels, including the list of all videos, view counts, descriptions, hashtags, publication dates, titles, comments, like counts, and video links. Interestingly, the description section of every video includes the full text of its audio script. Therefore, we were able to analyze the content of the videos directly through the descriptions. We also downloaded the YouTube videos and analyzed the variables through descriptive analysis. Publication dates were converted from GMT to Beijing’s official time zone, UTC+8 (Palmer 2019).
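The time zone conversion described above can be illustrated with a minimal Python sketch. It is not the code used in this study: the function name `to_beijing` and the sample timestamp are hypothetical, and the sketch assumes that publication times arrive as ISO 8601 strings in UTC. Because China does not observe daylight saving time, a fixed UTC+8 offset is sufficient.

```python
from datetime import datetime, timedelta, timezone

# China uses a single official time zone with no daylight saving time,
# so a fixed UTC+8 offset is enough for this conversion.
BEIJING = timezone(timedelta(hours=8))


def to_beijing(published_at: str) -> datetime:
    """Parse an ISO 8601 UTC timestamp and return it in Beijing time."""
    # Replace a trailing "Z" because datetime.fromisoformat only accepts
    # it from Python 3.11 onward.
    parsed = datetime.fromisoformat(published_at.replace("Z", "+00:00"))
    if parsed.tzinfo is None:
        # Assumption: timestamps without an offset are already in UTC.
        parsed = parsed.replace(tzinfo=timezone.utc)
    return parsed.astimezone(BEIJING)


# Hypothetical timestamp for illustration, not taken from the dataset.
local_time = to_beijing("2021-10-09T20:30:00Z")
print(local_time.isoformat())  # 2021-10-10T04:30:00+08:00
```

Note that the conversion can move a video to a different calendar day, which matters when uploads are later grouped by day or week.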

Results

Finding 1: The emergence and spread of puppet-anchor videos

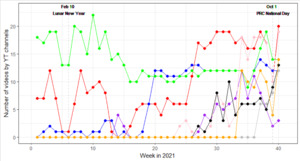

Through October 10, 2021, the eight puppet-anchor channels had accumulated 31.5 million views and uploaded 2,148 videos. The number of puppet-anchor channels increased from three in 2020 to eight in 2021. Screenshots of these eight puppet-anchor channels can be found in Appendix A4. Figure 2 shows that the number of videos uploaded increased from around twenty per week in early 2021 to ninety per week in October 2021. Evidence that these videos were also spread through Line and Facebook can be found in Appendix A1.

Finding 2: Evidence of potential China-related coordination

There are three lines of evidence that these channels coordinated with each other, and the pattern suggests that these channels may originate from China. The first piece of evidence is the time of day that the videos were published. In Figure 3, the left column shows the distributions of publication times (transformed to Beijing’s time zone and shown in 24-hour format) for the eight channels in 2020, while the right column shows the publication times in 2021. (In 2020, only three of the channels published puppet-anchor videos.) The first video was usually uploaded before noon, and the second was published around 5 pm. The time distributions are similar to those of previous coordinated behaviors of the Chinese cyber army on Twitter (A. H.-E. Wang et al. 2020). Taken together, the publication times suggest that the videos were created by employees during working hours, not by amateurs after class or work. Moreover, the noon–2 pm relative silence could be the result of the standard lunch break of Chinese officials (Palmer 2019; A. H.-E. Wang et al. 2020).

Interestingly, all three established channels changed their publication pattern simultaneously at the start of 2021, and the other five emerging puppet-anchor channels also followed this new pattern. The right column in Figure 3 suggests that these channels uploaded videos before noon, around 4 pm, and around 8 pm. The same break remains. The same pattern and the same change of pattern suggest that these channels are coordinated. YouTube’s decision to delete all these channels supports this inference.
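As a rough illustration of how the time-of-day comparison could be tabulated, the following Python sketch counts uploads per hour for each channel and year and reports the share that falls in the noon–2 pm window. It is a sketch under stated assumptions, not the analysis code behind Figure 3: the function names, the channel labels, and the sample records are all hypothetical, and the timestamps are assumed to have already been converted to Beijing time.

```python
from collections import Counter, defaultdict
from datetime import datetime


def hourly_profile(records):
    """Map (channel, year) to a Counter of publication hours (0-23)."""
    profile = defaultdict(Counter)
    for channel, local_time in records:
        profile[(channel, local_time.year)][local_time.hour] += 1
    return profile


def quiet_share(hour_counts, start_hour=12, end_hour=14):
    """Share of uploads published in the window [start_hour, end_hour)."""
    total = sum(hour_counts.values())
    if total == 0:
        return 0.0
    in_window = sum(
        n for hour, n in hour_counts.items() if start_hour <= hour < end_hour
    )
    return in_window / total


# Hypothetical records (channel label, Beijing-time timestamp).
records = [
    ("channel_a", datetime(2021, 3, 1, 10, 30)),
    ("channel_a", datetime(2021, 3, 1, 16, 5)),
    ("channel_a", datetime(2021, 3, 1, 20, 10)),
    ("channel_b", datetime(2021, 3, 1, 11, 0)),
    ("channel_b", datetime(2021, 3, 1, 13, 15)),
]

profile = hourly_profile(records)
for key in sorted(profile):
    print(key, sorted(profile[key].items()), quiet_share(profile[key]))
```

Comparing the per-channel profiles for 2020 and 2021 side by side is one simple way to check whether several channels shifted their schedules at the same time.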

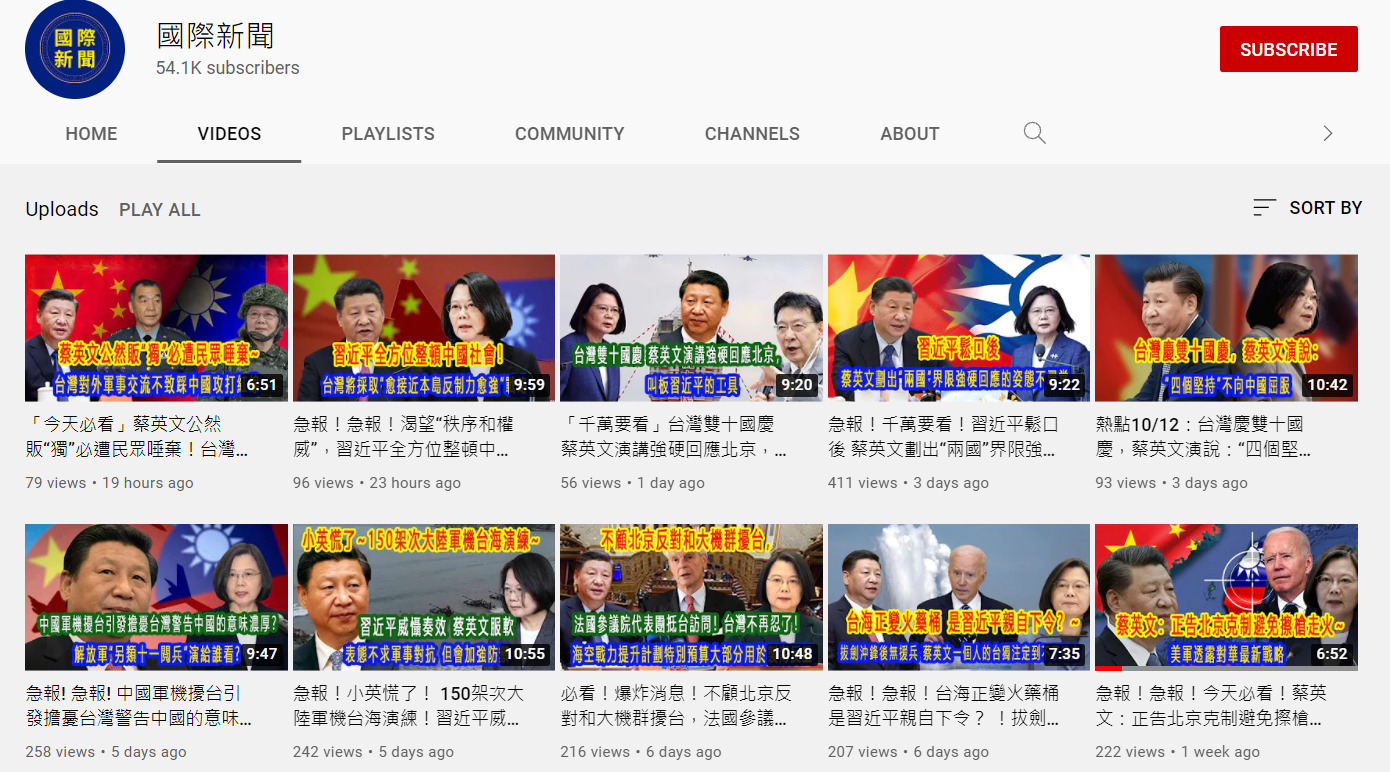

The second line of evidence linking China to the content coordination is that these channels promoted the same pro-China topics in the same time frames. Figure 4 shows three major topics covered by these channels in late 2021; each color represents a different channel, the X-axis shows the week and the Y-axis measures the number of videos mentioning specific topics. Between Week 28 and Week 36, all channels attacked the Medigen Vaccine, a COVID-19 vaccine developed in Taiwan with help from the United States. Between Week 35 and 38, all channels shifted to support Chang Ya-chung, an extremely pro-China KMT chairmanship election candidate who openly supports immediate unification with China. After Chang lost the KMT chairmanship election on September 25th, the channels no longer mentioned him. Similarly, in Week 39 and Week 40, all channels suddenly focused on 3Q (Bo-Wei Chen), a pro-independence legislator whose recall election took place on October 23rd. Importantly, Chang Ya-chung was not a salient topic in Taiwan but was popular in China and among overseas Chinese. So, the coordinated coverage on Chang Ya-chung may indicate that these channels are influenced more by China and overseas Chinese audiences.
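The weekly topic counts plotted in Figure 4 could be approximated with a keyword match over the video descriptions, which contain the full scripts. The Python sketch below is illustrative only. The keyword lists are our own assumptions about how each topic might appear in the text, the sample videos are hypothetical, and ISO week numbers are used, which may not match the week numbering in the figure.

```python
from collections import Counter
from datetime import date

# Assumed keywords for each topic; these lists are illustrative and
# are not the coding scheme used in the study.
TOPIC_KEYWORDS = {
    "Medigen vaccine": ["高端"],
    "Chang Ya-chung": ["張亞中"],
    "Bo-Wei Chen (3Q)": ["陳柏惟", "3Q"],
}


def weekly_topic_counts(videos):
    """Count videos per (channel, ISO week, topic) by keyword match."""
    counts = Counter()
    for channel, published, description in videos:
        week = published.isocalendar()[1]
        for topic, keywords in TOPIC_KEYWORDS.items():
            if any(keyword in description for keyword in keywords):
                counts[(channel, week, topic)] += 1
    return counts


# Hypothetical videos: (channel label, publication date, description).
videos = [
    ("channel_a", date(2021, 7, 19), "高端疫苗的最新消息"),
    ("channel_b", date(2021, 7, 20), "關於高端的報導"),
    ("channel_a", date(2021, 9, 20), "張亞中參選國民黨主席"),
]

for key, n in sorted(weekly_topic_counts(videos).items()):
    print(key, n)
```

A simple substring match will miss paraphrases and may over-count incidental mentions, so counts produced this way are best read as a rough indicator of when a topic rises and falls across channels.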

The last (and perhaps weakest) type of evidence of China’s involvement can be found in the video descriptions and publication dates. Although most of the video descriptions and transcripts are written in traditional Chinese (which is mainly used in Taiwan and Hong Kong), many simplified Chinese characters (mainly used in China and many overseas Chinese communities) are also found. Additionally, several terms are only used by speakers/writers of simplified Chinese. For example, in traditional Chinese the term for a video is “Ing-Pien,” while in simplified Chinese it is “Shih-Ping.” In addition, one channel claimed to be located in Hong Kong. (Other examples can be found in Appendix A5.) Furthermore, there was a noticeable decline in the number of videos uploaded in mid-February and the first week of October, and the decline occurred across all of the channels. In 2021, the Chinese (Lunar) New Year occurred in mid-February, and October 1st is the National Day of the People’s Republic of China. People in China usually have a week off during these two periods, but people in Taiwan do not. However, simplified Chinese terms are also used, and the Lunar New Year is also celebrated, by Chinese people overseas, so this last type of evidence cannot exclude the possibility that the videos were from overseas Chinese content firms, such as those found in Malaysia (Liu, Ko, and Hsu 2019).

Discussion

Puppet anchors in China’s information operations

These puppet anchors may serve as one of the new tools in China’s information strategy. Previous studies have shown that the Chinese government employs a broad-stroke social media strategy focusing on mass messaging, encouraging self-censorship rather than direct confrontation, and creating an environment of uncertainty. Direct censorship on the internet has proven ineffective as journalists frequently develop tactics to circumvent direct measures, such as filters or blockers, and this risks elevating debates rather than quieting them (Xu 2015; Lorentzen 2014). Instead, China has developed indirect methods to address anti-government sentiment online. China intentionally keeps the criteria used to decide what online activity prompts government crackdowns opaque, leaving activists unclear as to how far they can push, which in turn motivates them to self-censor to ensure they do not cross a line (Stern and Hassid 2012; Xu 2015). Most notably, China employs “strategic distraction” through mass posting efforts, such as the famed 50c army, which generates positive messaging either passively by creating noise to lessen the visibility of anti-government posts, or actively by distracting from controversial discussions and changing the topic (King, Pan, and Roberts 2017). We propose that puppet anchors offer an alternative low-cost, easy-to-produce application of this kind of distraction strategy for video social media, enabling the same mass posting technique to create noise and shift the discussion away from content that is deemed unfavorable to Chinese government interests.

The content of the videos considered here focused on attacking the ruling party in Taiwan, building up the legitimacy of China, and delegitimizing U.S. involvement with Taiwan. Mass-produced videos that look like legitimate news and use clickbait keywords to attract a high number of views would apply the same mass-posting strategy that is used on the mainland, as detailed above, but with the reverse goal of elevating negative discussions and anti-Taiwan-government sentiment.

Recently, A. H.-E. Wang et al. (2020) found that the text-image on Twitter was more important than the 140-character post in explaining the discourse of the Chinese cyber army as well as the criteria for censorship by Twitter. We also suggest that China may promote its propaganda through YouTube videos, not just words or text-images. Moreover, the YouTube link implies the likelihood of cross-platform coordination, as one can share the link containing the puppet anchor in private messaging apps or on Facebook. We offer evidence in Appendix A1 that these puppet-anchor videos were shared through Facebook and Line. If a viewer sees the same anchor across channels (or in multiple videos), the increased familiarity may result in a greater willingness to accept the content (Lu and Pan 2021).

Advantages of puppet-anchor videos

The first advantage of a puppet-anchor video is its low cost. All content can be reused (and the duplicated format implies the likelihood of auto-generation). For example, Python can be used to automatically download trending TikTok videos, combine several of them, create a thumbnail, and then upload the result to YouTube.[1] China has previously employed this mass generation technique in its social media strategy through mechanisms like the 50c army, as discussed above.

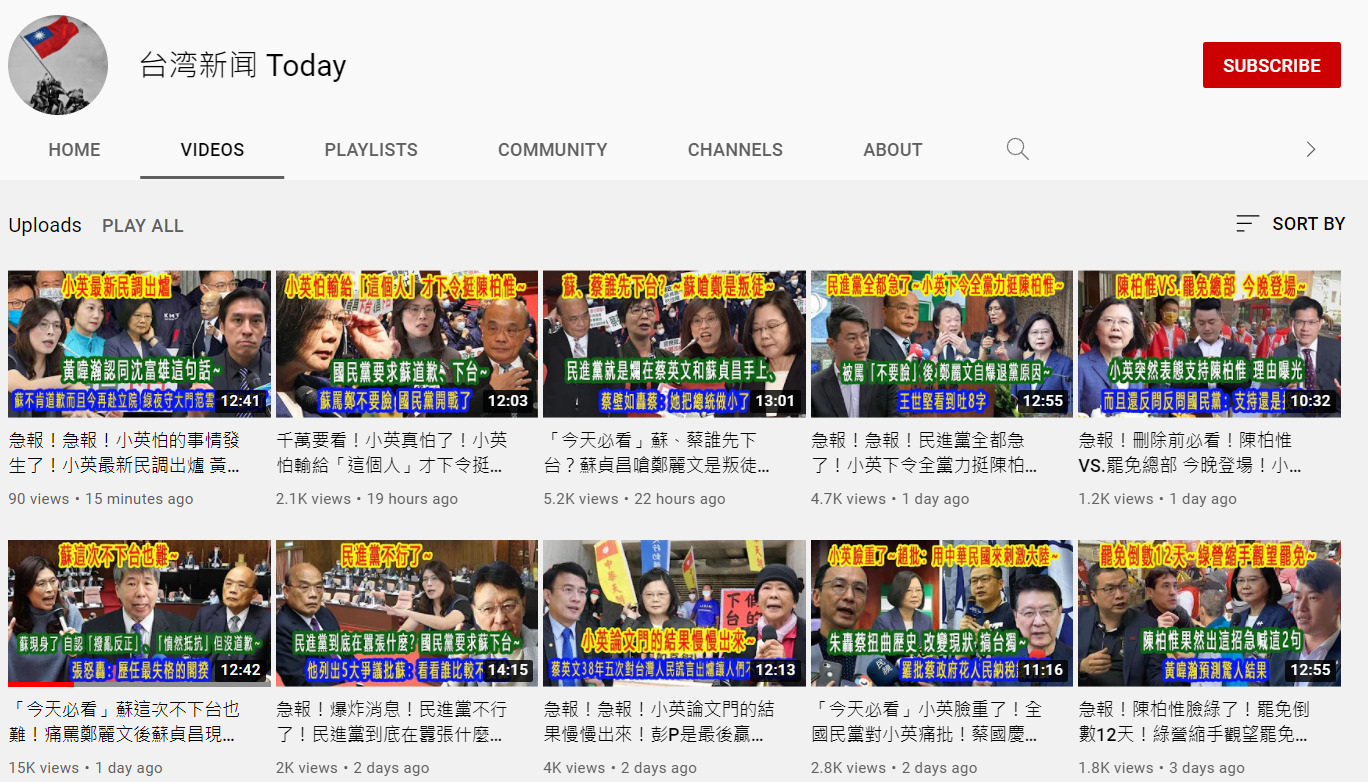

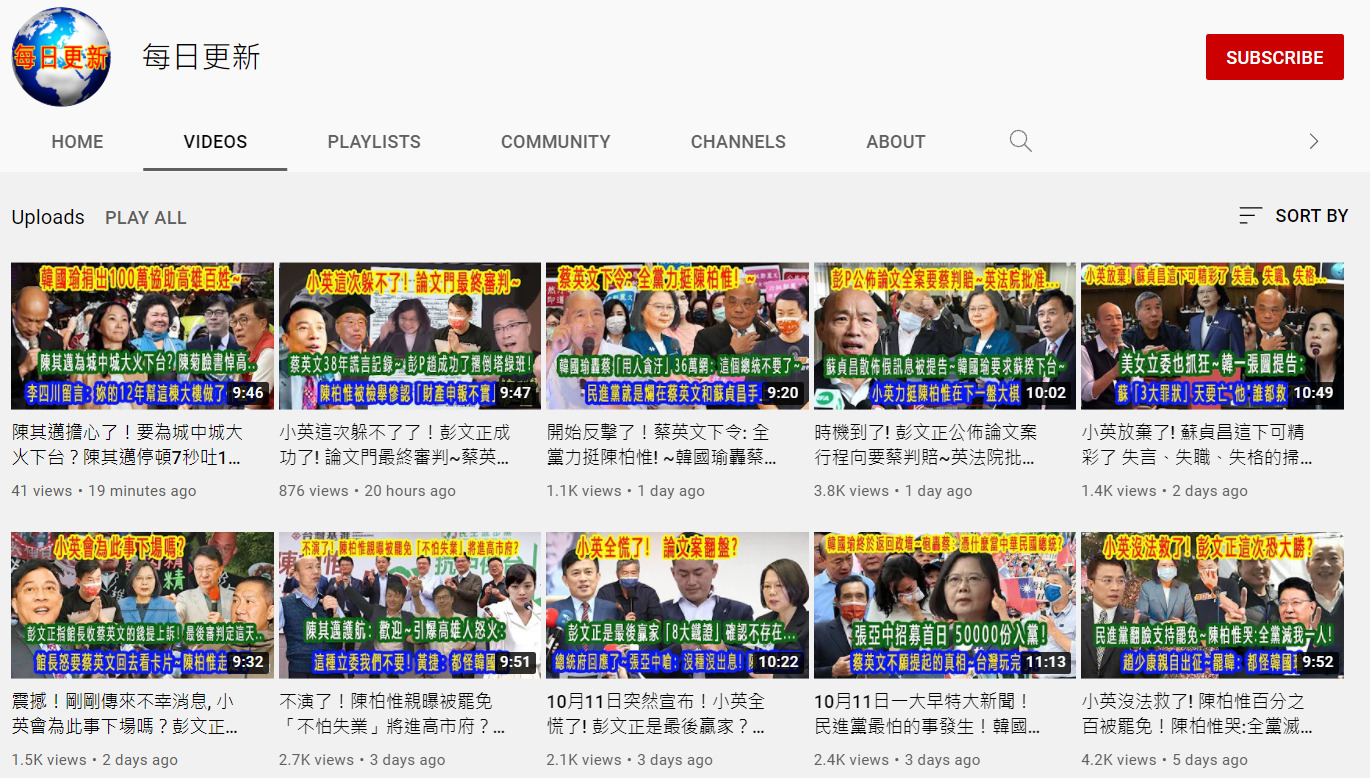

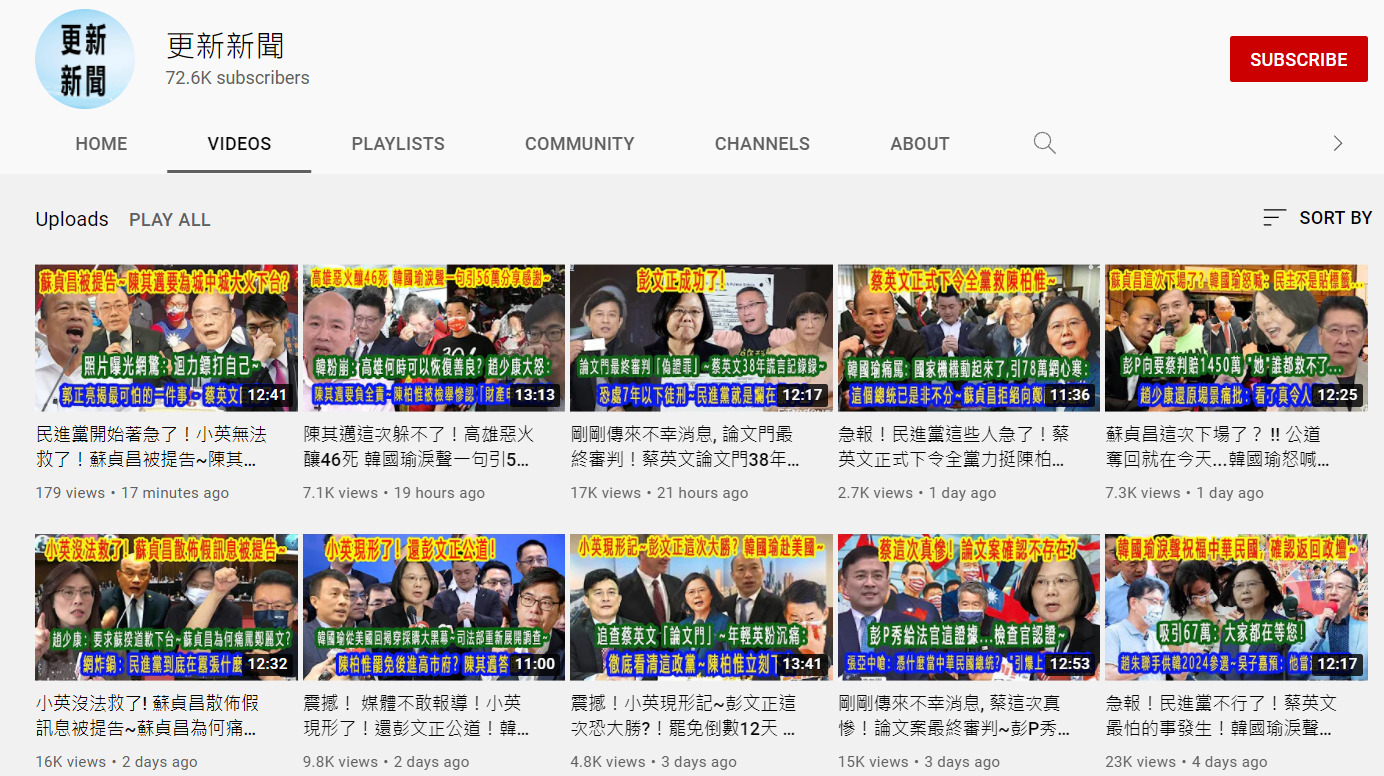

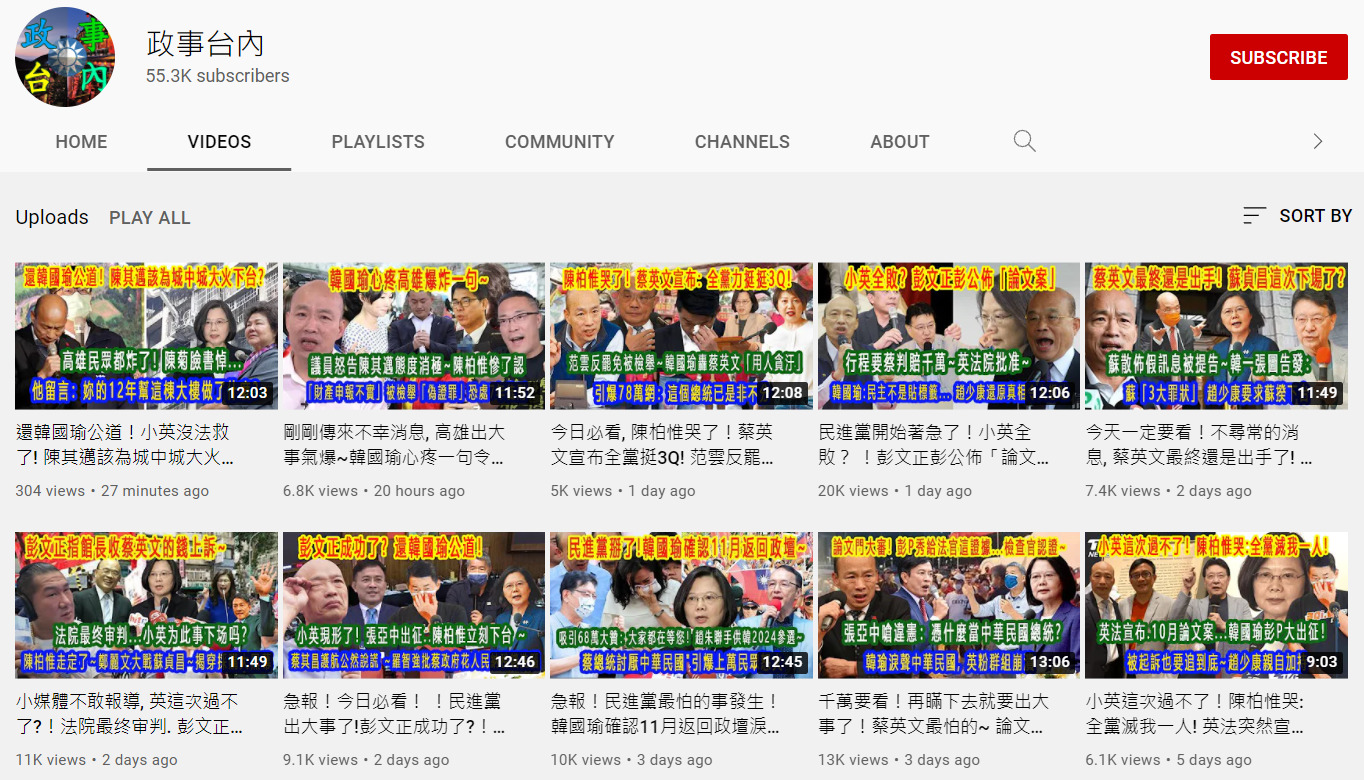

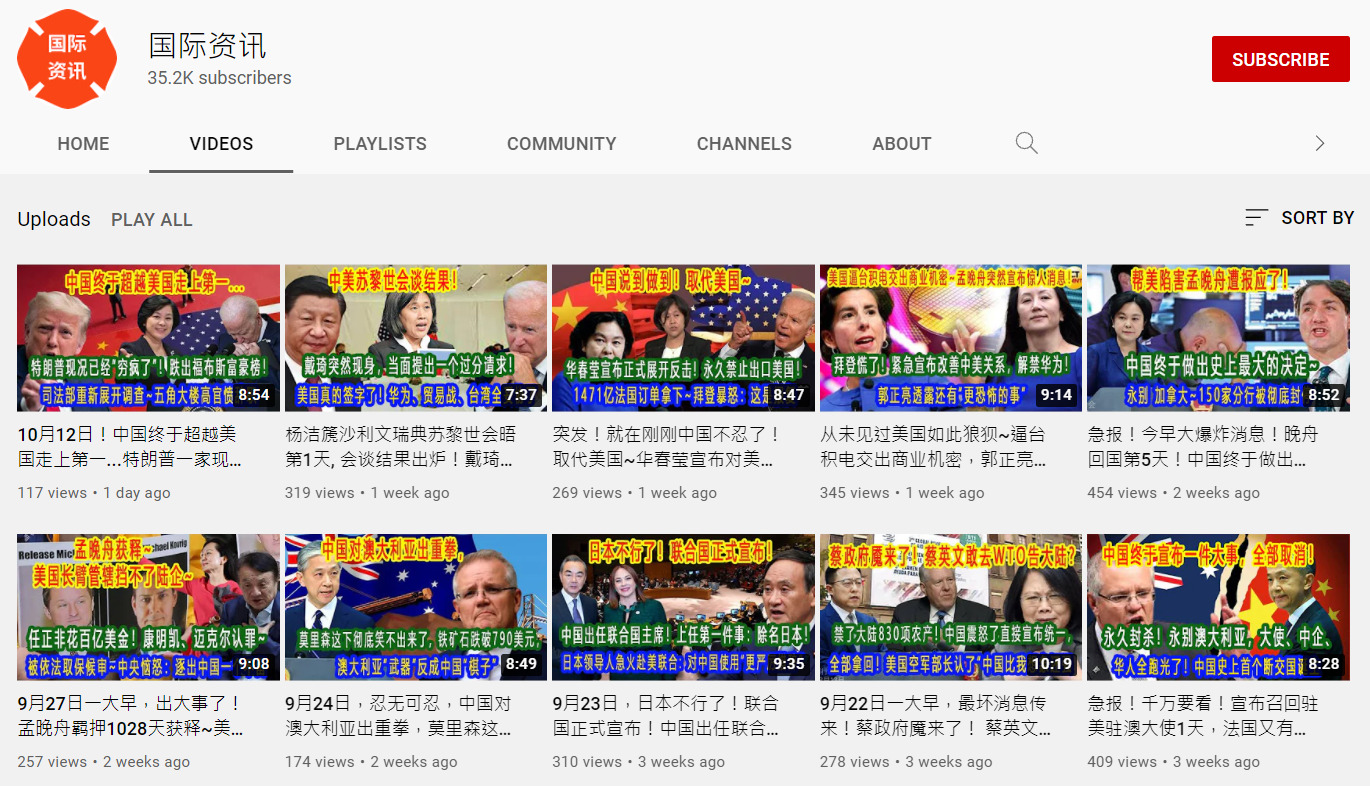

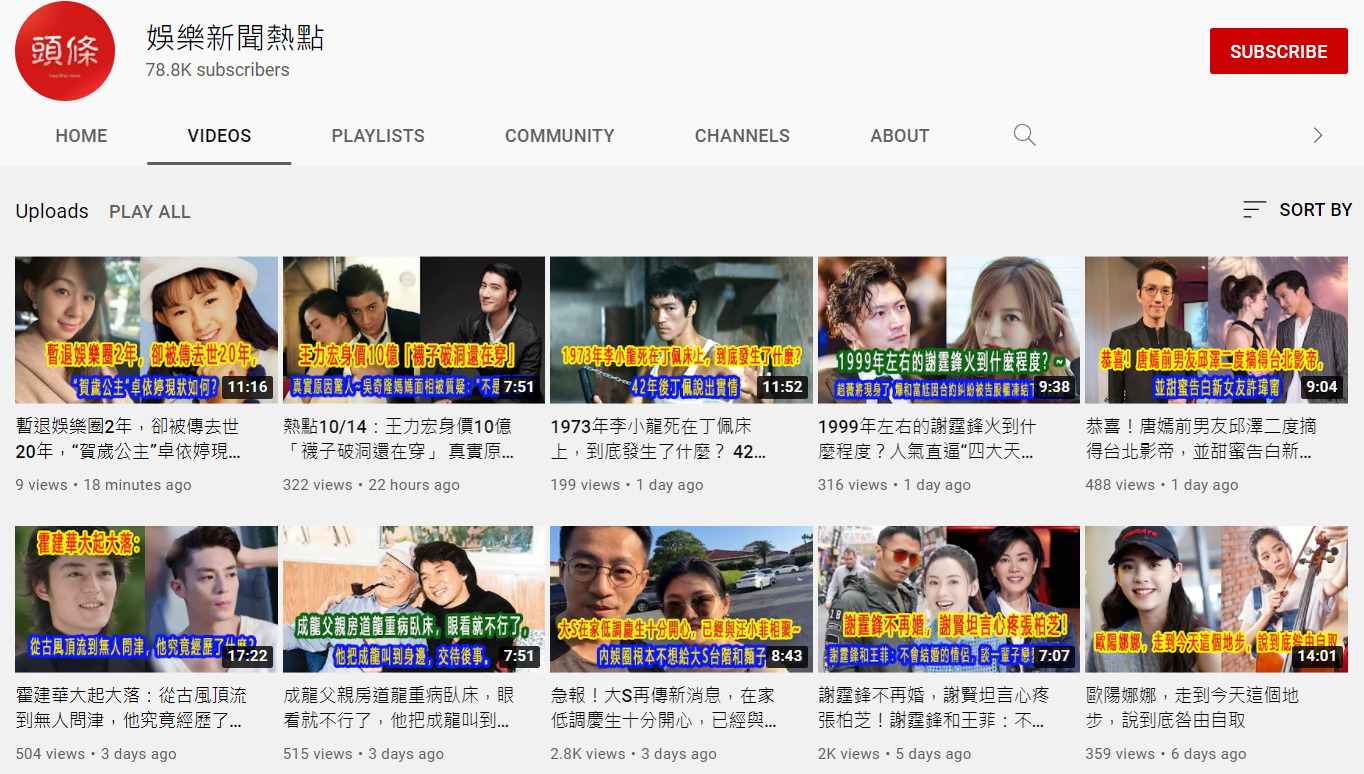

The second advantage is the design of the thumbnail and clickbait title. Appendix A2 shows screenshots of the front page of all puppet-anchor YouTube channels on October 10, 2021. All eight of the YouTube channels that appear in this study have similar thumbnails that feature the following: photoshopped pictures of influential people from China, Taiwan, and sometimes a Western country, such as the United States or Australia, as well as attention-grabbing headers with provocative titles in red, yellow, green, or blue. This phenomenon is known as clickbait: the use of psychological methods to capture viewers’ attention so that they click on the title, which then leads to the puppet-anchor video. There are two easily identifiable aspects of clickbait: the use of provocative syntax and diction, and the use of eye-catching images to draw the attention of viewers (Blom and Hansen 2015; Chen, Conroy, and Rubin 2015; Bazaco, Redondo, and Sánchez-García 2019). The provocative aspect may take advantage of viewers’ natural inclination to satisfy their curiosity (Loewenstein 1994; Lu and Pan 2021; Potthast et al. 2018). The clickbait titles purport to offer information that the viewer does not already have, and this prompts the viewer to click to fill the information gap.

These thumbnails follow this method exactly. Almost all of the videos include clickbait titles such as: “must read today! (本日必看)”, “cannot miss this! (不能錯過)”, “the true story! (真相)”, “US surrender! (美國軟了)”, “Tsai surrenders! (蔡英文軟了)”, and “Just Happened! (大事剛發生!)”. Clickbait often includes misleading, sensationalized language in the hope of creating an emotional response that causes the viewer to click on the item (Wu et al. 2020). Words such as “must,” “cannot miss,” and “surrenders” are examples of this tactic in the example titles above, further demonstrating the use of clickbait to increase engagement.
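To make the notion of a clickbait title concrete, the following Python sketch flags titles that contain any of the phrases quoted above and computes the share of flagged titles. It is only a sketch: the phrase list is taken from the examples in this section, with “軟了” used as an assumed shorthand that covers both “surrender” examples, and the sample titles are hypothetical rather than drawn from the dataset.

```python
# Phrases quoted in this section. "軟了" is an assumed generalization
# that matches both "美國軟了" and "蔡英文軟了".
CLICKBAIT_PHRASES = ["本日必看", "不能錯過", "真相", "軟了", "大事剛發生"]


def clickbait_hits(title):
    """Return the clickbait phrases that appear in a title."""
    return [phrase for phrase in CLICKBAIT_PHRASES if phrase in title]


def clickbait_share(titles):
    """Share of titles containing at least one clickbait phrase."""
    if not titles:
        return 0.0
    flagged = sum(1 for title in titles if clickbait_hits(title))
    return flagged / len(titles)


# Hypothetical titles for illustration only.
titles = ["本日必看!大事剛發生!", "今日天氣預報"]
for title in titles:
    print(title, clickbait_hits(title))
print(clickbait_share(titles))  # 0.5
```

A fixed phrase list captures only the wording observed so far, so new clickbait formulas would need to be added by hand.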

The third advantage is that the presence of a puppet anchor may enhance the appearance of legitimacy of misinformation and may help creators target a particular group (i.e., the elderly). The content behind these videos is mostly from China Times, a China-affiliated news agency in Taiwan (Kao 2021; T.-L. Wang 2020). In a 2019 survey of journalists and editors in Taiwan, 60% chose China Times as the news source they would be least likely to read (Hsu 2019). However, the use of puppet anchors transformed content from China Times into a news-program-like format, with an anchor, stage, and transcript, which may persuade the audience to believe that it comes from a kind of independent media. Therefore, the format may serve to enhance the seeming legitimacy of its source.

The last advantage of the puppet-anchor approach is how difficult it is to detect. Literature on deepfake detection focuses on facial features (Mittal et al. 2020) or the distinct level of resolution of the face (Li and Lyu 2018). For these puppet anchors, however, part of their face is covered, and the resolution of the videos may not be downgraded. Machine-generated voices are used widely in many other types of videos, such as political mockery clips.

Future research agenda on puppet anchors and YouTube propaganda

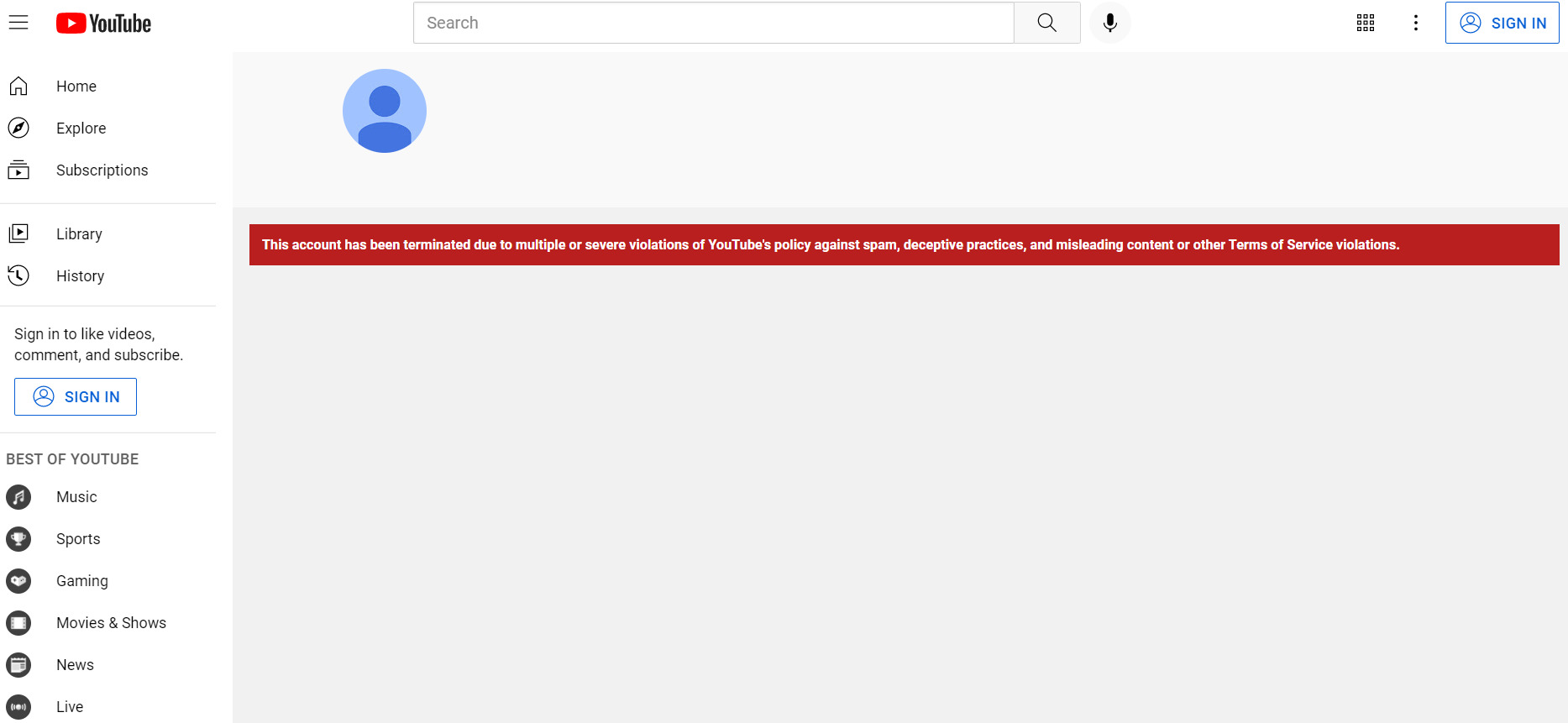

The difficulty of puppet anchor detection is evidenced by the response from YouTube. On October 21, 2021, the authors of this article received a letter from YouTube stating that they had noticed the existence of puppet anchors and deleted three channels reported by one author of this article. YouTube reviewed the content on these channels and confirmed that they violated its misinformation policy.[2] Nevertheless, YouTube failed to find the other five channels and invited the author to provide a full list. After the list was provided, the remaining five channels were terminated on October 25th for “multiple or severe violations of YouTube’s policy … .” One screenshot of the decision to terminate is shown in Appendix A3. YouTube’s decision to terminate the eight puppet-anchor channels supports the major supposition of this article that the puppet-anchor channels focused on the coordinated spread of misinformation. However, the request from YouTube to our author also demonstrates the inherent difficulty in detecting puppet-anchor videos.

This article only applied descriptive analysis to the text/audio content of these videos. However, the layout and the design of the videos may contain additional information, and future research is needed in this area.

Given the low-cost, attention-catching, easy-to-spread, hard-to-detect nature of these videos and the potential for auto-generation, it is highly probable that puppet-anchor channels will reemerge in the future and across different languages. We have already observed the reappearance of additional puppet-anchor channels on YouTube after the first eight channels were taken down.[3] It is also possible that we will see different types of puppet-anchor videos targeting different groups within the broader YouTube audience in the future. Future work in studying puppet anchors could focus on their linkage to deepfakes, cross-platform coordination, and content detection.

Corresponding Author

Austin Horng-En Wang, austin.wang@unlv.edu

Department of Political Science, University of Nevada, Las Vegas